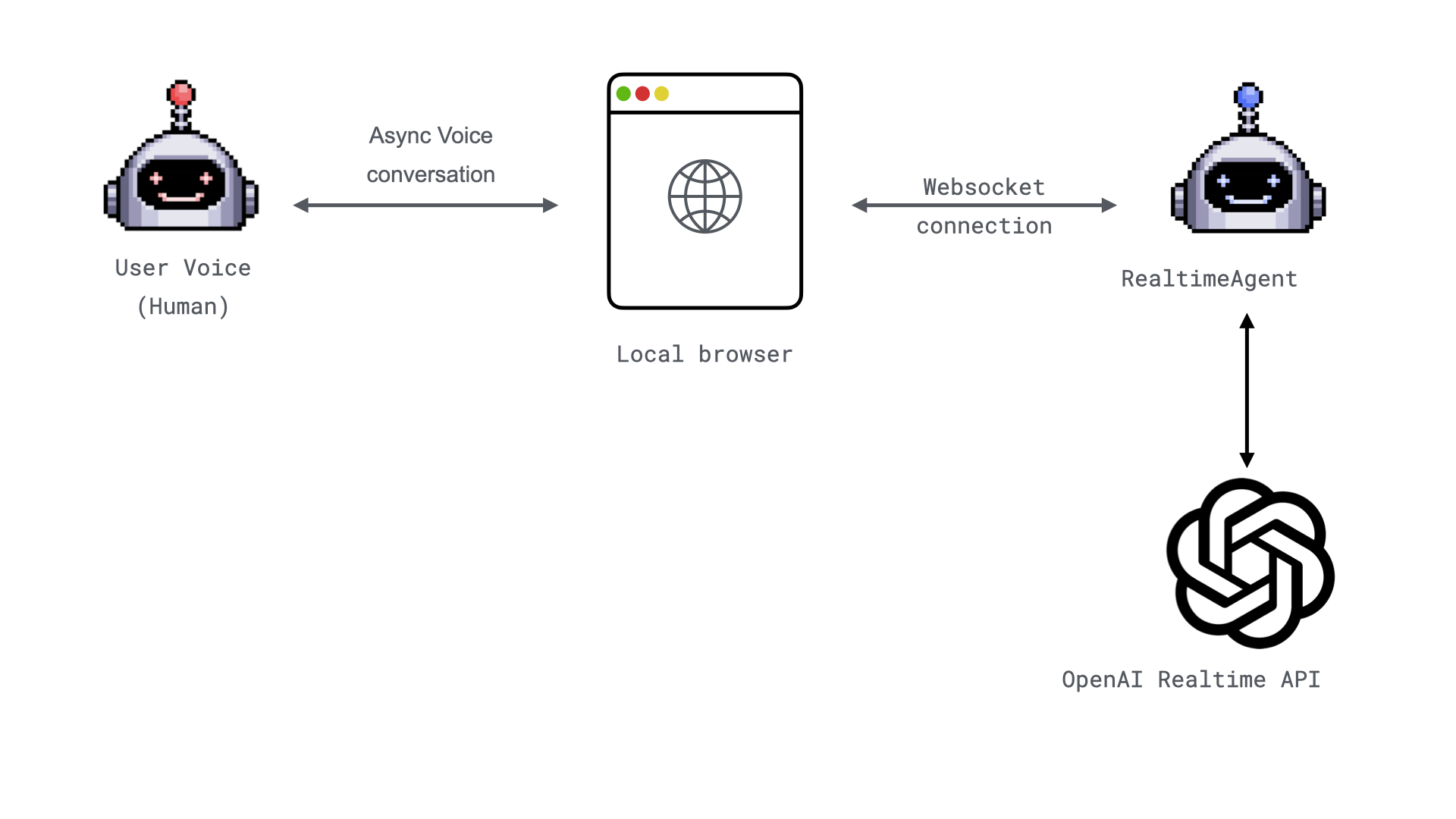

Real-Time Voice Interactions with the WebSocket Audio Adapter

TL;DR:
- Demo implementation: Build a website that uses WebSockets to talk to the RealtimeAgent by voice.
- Introducing WebSocketAudioAdapter: Stream audio directly from your browser using WebSockets.
- Simplified Development: Connect to real-time agents quickly, with minimal setup.

Realtime over WebSockets

In our previous blog post, we introduced a way to interact with the RealtimeAgent using the TwilioAudioAdapter. It works well, but it takes considerable setup: integrating Twilio, configuring an account, forwarding a phone number, and handling other details. Today, we're excited to introduce the WebSocketAudioAdapter, a simpler way to stream real-time audio directly from a web browser.

This post covers the features, benefits, and implementation of the WebSocketAudioAdapter, and shows how it simplifies connecting to real-time agents.
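To make the browser-to-agent idea concrete, here is a minimal, self-contained sketch of what a server-side audio adapter does conceptually. It receives audio chunks that the browser sends as WebSocket text frames, decodes them, and hands the raw bytes on to the agent. This is not the WebSocketAudioAdapter API. The JSON message shape, the function names (`encode_audio_message`, `decode_audio_message`, `relay`), and the use of `asyncio.Queue` objects as stand-ins for a live WebSocket connection and for the RealtimeAgent are all hypothetical, chosen only for illustration.

```python
import asyncio
import base64
import json
from typing import List, Optional

# Hypothetical frame shape (an assumption, not the adapter's documented wire format):
# {"event": "media", "media": {"payload": "<base64-encoded PCM16 audio>"}}


def encode_audio_message(pcm_chunk: bytes) -> str:
    """Wrap a raw audio chunk as a JSON text frame with a base64 payload (browser side)."""
    payload = base64.b64encode(pcm_chunk).decode("ascii")
    return json.dumps({"event": "media", "media": {"payload": payload}})


def decode_audio_message(text: str) -> Optional[bytes]:
    """Return the audio bytes from a media frame, or None for any other event type."""
    message = json.loads(text)
    if message.get("event") != "media":
        return None
    return base64.b64decode(message["media"]["payload"])


async def relay(incoming: asyncio.Queue, to_agent: asyncio.Queue) -> None:
    """Forward decoded audio from a socket-like queue to the agent until a None sentinel arrives."""
    while (text := await incoming.get()) is not None:
        audio = decode_audio_message(text)
        if audio is not None:  # skip non-audio control events
            await to_agent.put(audio)


async def main() -> List[bytes]:
    incoming: asyncio.Queue = asyncio.Queue()  # stands in for frames arriving on the WebSocket
    to_agent: asyncio.Queue = asyncio.Queue()  # stands in for the agent's audio input
    for chunk in (b"\x00\x01", b"\x02\x03"):
        await incoming.put(encode_audio_message(chunk))
    await incoming.put(json.dumps({"event": "stop"}))  # a control event that carries no audio
    await incoming.put(None)  # sentinel: the socket has closed
    await relay(incoming, to_agent)
    return [to_agent.get_nowait() for _ in range(to_agent.qsize())]


print(asyncio.run(main()))  # prints [b'\x00\x01', b'\x02\x03']
```

The queues let the sketch run without a browser or server. In a real deployment, the incoming frames would come from a WebSocket endpoint, and the decoded audio would be streamed to the realtime model. The model's audio replies would travel back to the browser over the same connection.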